This is the third and concluding article of the Circuits in Session series. The quality of GPT4’s legal analysis is evaluated and both positive and negative test results are reported. It did process legal frameworks very well but struggled with nuanced understanding of facts and equity—a significant limitation for real-world applications. The results of the experiments are encouraging to those who hope to use generative AI as a legal tool. Someday AI may even take over some tasks performed by human judges, if not replace them entirely, starting with appellate judges, but not today.

Lady Justice in future with AI enhancements. Image by Ralph Losey plus AI.

The first two articles in this series on AI as an Appellate Court Judge are: Circuits in Session: How AI Challenges Traditional Appellate Dynamics (10/13/23); Circuits in Session: Addendum and Elaboration of the Appellate Court Judge Experiment (10/25/23). The two experiments discussed in this series demonstrate impressive legal reasoning abilities. But the results did not support the hypothesis that GPT4 could serve as an appellate court judge in some cases. Note the experiments did not test legal research ability, only the ability to analyze and make just decisions.

The evidence showed GPT4 was good, but not great, and that means it was inadequate for the job. AI should only be used to improve the quality of dispute resolution, to uplift the bench, not lower the bar. Justice is critical to the proper functioning of society and should never be automated just for efficiency and economy. The conclusion elaborates on these thoughts and provides a vision of how generative AI may someday be used to uphold the quality and integrity of legal systems throughout the world.

High-Level Overview of Experiment Results

My hypothesis of the competence of GPT4 to serve as an appellate judge was not confirmed to my satisfaction because:

- Significant technical challenges were encountered in the use of ChatGPT4 to adjudicate disputes; and,

- Quality deficiencies were also encountered; the legal analysis, although good, was not great, certainly not on par with the top appellate court judges.

The first, functional type of challenge can probably be fixed in just a few years. The second, however, which hinges on correcting deficiencies in the evaluation of facts and equity, is much more challenging. It is hard to predict how long it may take to fix that, if ever. In my admittedly idealistic, pro-human view, that means we should not consider replacing, or even supplementing, human judges until the AI version is at least as good as, if not better than, the best humans. AI must be a path to excellence, not just less expensive mediocrity.

Future Circuits Judge in session. Aligned and Certified. Image by Ralph Losey plus AI.

Some believe the second type of challenge, the quality challenge, is forever beyond the ability of artificial intelligence. If so, that means that AIs can never be as good as human judges, even on the highly intellectual appellate level. Time will tell. Just remember, they used to say the same thing about Chess and then Go, etc. If you assume it is possible, then the question is how long it may take. That depends on whether LLM AI models like GPT4 can keep improving at the exponential rate they have shown in the last two years. That again is a matter of debate. Some, including Bill Gates, think it is already at or near a dead end. Bill Gates does not expect GPT-5 to be much better than GPT-4 (Decoder, 10/21/23). Others think that GPT4 and other generative AIs are still in the early stages of exponential improvements.

The bigger question is whether the continued improvement in reasoning, approaching super-intelligence in this one ability, will ever give GPTs a proper appreciation of the facts and equity of a case. The AI has no real feelings, and, as to facts, has only a secondhand, abstract experience of reality. Will super-reasoning ever make up for that?

Can Robots ever develop the compassion and other human qualities needed to do justice? Image by Ralph Losey and AI.

I for one think that it might, but the experiments in Circuits in Session show it is not there yet. It is not ready to replace Appellate Court judges today, even where facts are not disputed, as was true in the case experimented with, Brandi McKay vs. Miami-Dade County, 36 F.4th 1128 (11th Cir. June 9, 2022). Still, the overall results are encouraging, very encouraging. GPT is already a cutting-edge tool for legal analysis, albeit still challenging to use. Despite the problems encountered, AI can, if used properly, serve as a powerful tool of justice. It can make the work of human judges easier and more efficient. Someday, perhaps soon, it may be able to appreciate all of the facts and equities of a case, just like our most sensitive, fair-minded human judges. Then it could be of much greater service.

Judges can be helped by AI. Image by Ralph Losey plus AI of judge with AI tools.

Critique of ChatGPT’s Judicial Analysis

Prediction of Outcome of the Appeal and Possible Dissent

I first asked GPT4 to predict the outcome of the McKay appeal. It correctly predicted the lower court’s opinion would be affirmed. A correct prediction is, in itself, solid proof of competence, but I was looking for much more. I wanted to know the details of its legal reasoning.

The lower court found that the “economic reality” of Ms. McKay’s relationship with the County indicated that she was the primary beneficiary. She gained valuable skills and networking opportunities, which is consistent with the purpose of an internship. The duration of the internship was also not found to be “grossly excessive,” further supporting the volunteer classification.

Redo – Revised Second Stage of the ChatGPT-4 Appeal Experiment (Losey, 10/15/23).

That is the core reason provided, and it is right on point, although some of the other language in the GPT memorandum, not quoted here, is unhelpful, even if not incorrect. So I give the reasoning a high score, but not perfect. I was known as a hard grader in law school too.

You could say the prediction of an affirmation is not too surprising, in view of the statistical rarity of reversals, even just partial reversals. It only happens in eight percent (8%) of all appeals. Edwards, Why Appeals Courts Rarely Reverse Lower Courts: An Experimental Study to Explore Affirmation Bias (68 Emory L. J. Online 1035 (2019)). Since generative AI works through probability predictions, you could explain the overall prediction that way, but not the on-point explanation as to why it would likely be affirmed. Plus, the statistical prediction of a dissent goes well against the odds. This indicates real legal analysis. It also confirms that GPT4’s score in the top ten percent (10%) of the multi-state Bar Exam was no fluke. Is it irrational exuberance to expect GPT5 to score in the top one percent (1%)?

In the second experiment GPT-4 predicted a 40% likelihood of dissent based on the assigned panel of Circuit Judges Jordan, Tjoflat and Pryor (Jill). Redo – Revised Second Stage of the ChatGPT-4 Appeal Experiment. In the first experiment it predicted the likelihood of a dissent at an even more remarkable 55%. Circuits in Session: How AI Challenges Traditional Appellate Dynamics (10/13/23).

This shows a complete break from merely statistics-based predictions, because in fact only 2.6% of Circuit appeal cases have a dissent. Epstein, Landes, Posner, Why (and When) Judges Dissent, Journal of Legal Analysis, Spring 2011: Volume 3, Number 1 at pg. 106. Moreover, my study using Lexis indicates that none of the three judges on this panel is particularly prone to dissents. The judges again are Jill Pryor (appointed by Obama), Adalberto Jordan (appointed by Clinton) and Gerald Tjoflat. It is important to understand that Gerald Bard Tjoflat is a remarkable senior judge. He was appointed as a Circuit Appeals Judge by President Ford in 1975 and retired in November 2019. He was born in 1929 and served as a counter-intelligence investigator at the end of the Korean War. He is well known as one of the country’s great jurists, arising from my home court in the Middle District of Florida. In 1995, the Duke Law Journal published a tribute to Judge Tjoflat. Tribute to Gerald Bard Tjoflat, Duke Law Journal, Vol 44:985 (1995). It included articles by then Chief Justice William H. Rehnquist, retired Justices Lewis F. Powell, Jr. and Byron R. White, and Judge Edward R. Becker.
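For readers who like to see the arithmetic, here is a minimal sketch of how far GPT4's dissent predictions sit from the base rate. It uses only the figures quoted above (2.6% of appeals with a dissent, and predictions of 55% and 40%). The function and variable names are my own, chosen for illustration, and the comparison is a simple ratio, not a claim about how GPT4 actually reached its numbers.

```python
# Dissent base rate quoted above (Epstein, Landes & Posner, 2011).
DISSENT_BASE_RATE = 0.026

# GPT4's dissent predictions as reported in the two experiments.
gpt4_dissent_predictions = {
    "first experiment": 0.55,
    "second experiment": 0.40,
}


def ratio_to_base_rate(predicted: float, base_rate: float) -> float:
    """Return how many times larger a prediction is than the base rate."""
    return predicted / base_rate


for label, predicted in gpt4_dissent_predictions.items():
    multiple = ratio_to_base_rate(predicted, DISSENT_BASE_RATE)
    print(
        f"{label}: {predicted:.0%} predicted vs "
        f"{DISSENT_BASE_RATE:.1%} base rate, roughly {multiple:.0f}x"
    )
```

A model that simply echoed historical frequencies would land near the base rate. Predictions fifteen to twenty times higher suggest the answer was driven by something specific to this case and panel.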

Illustration by Ralph Losey plus AI of an AI Robot Judge of the distant Future inspired by image of today’s Circuit Judge Tjoflat.

Judge Tjoflat’s participation in Brandi McKay vs. Miami-Dade County, 36 F.4th 1128 (11th Cir. June 9, 2022) was as a voluntary retired judge, since the Eleventh Circuit, like most courts in the U.S., does not have enough active service judges to hear its case load. The Eleventh Circuit is sometimes called one of the most conservative courts in the country. But see: The Eleventh Circuit Cleans Up the Mess (Lawfare, 09/22/22). If you were to pick an outcome along political lines, with little regard to legal reasoning, as sometimes happens in other courts, you would predict a reversal here by Pryor and Jordan, against Tjoflat.

As mentioned in Circuits in Session: Addendum and Elaboration of the Appellate Court Judge Experiment (10/25/23), when prompted to guess which judge would be the most likely to dissent, it guessed Judge Jill Pryor, “given her judicial philosophy and past rulings on labor issues.” That was the wrong guess, as the dissent was actually by Judge Adalberto Jordan. Based on my studies of the rulings of these judges in employment law, I suspect this is an error that many Eleventh Circuit employment law experts would have made; many would have predicted Pryor over Jordan as a possible dissenter. See, e.g., Lewis v. City of Union City, 918 F.3d 1213, 1231 (11th Cir., 3/21/19) (Jill Pryor joined this unusually contentious dissent in summary judgment claims against city employee, whereas Jordan upheld ruling for employer); EEOC v. Catastrophe Mgmt. Sols., 876 F.3d 1273, 1279 (11th Cir., 12/5/17) (Pryor joined dissent and would grant en banc review of a denial of an employee discrimination claim, whereas Jordan upheld ruling); Villarreal v. R.J. Reynolds Tobacco Co., 839 F.3d 958, 973, 981 (11th Cir., 10/05/16) (Complex opinion where Jill Pryor joins in dissent to panel and favors employee in disparate impact discrimination case. Judge Jordan joins in separate, more limited dissent).

When asked to speculate as to why Judge Adalberto Jordan might object, the GPT response was again very good. I explained this before in the last article. Id. In the meantime, I have used Lexis to research the history of Judge Jordan’s opinions on employment law and his history of dissents. I found very few reversals, and they are for remand to allow a jury to make a factual determination. See, e.g., Martin v. Fin. Asset Mgmt. Sys., 959 F.3d 1048, 1058 (11th Cir., 5/14/20) (Jordan dissents in part, and would reverse summary judgment and remand to jury for Title VII retaliation claim); Ojeda-Sanchez v. Bland Farms, LLC, 499 Fed. Appx. 897, 904 (11th Cir., 11/29/12) (Jordan dissents in part from ruling for employer in an FLSA case and would remand for a jury trial on a question of fact regarding time worked); Nelson v. MLB Hotel Manager, LLC, 2022 U.S. App. LEXIS 19298, 2022 WL 2733720 (11th Cir., 07/13/22) (Jordan affirms summary judgment for employer in an FLSA restaurant tip case. Opinion notable for the reprimand of counsel for misuse of sanction motions that “often transforms a goodfaith legal dispute into an ugly mudslinging battle”).

After digging deeper into the case law I am more impressed than ever regarding ChatGPT’s analysis of the dissent. Maybe someday its capabilities will be expanded to allow it to study all cases cited and to provide its own citations. This shows GPT4 is ready to be a partial tool, but not ready to replace an appellate judge’s law clerk, much less the judge. Still, a very strong initial showing by GPT4.

Appellate Opinion Submitted for First Time and Detailed Summaries and Analysis Provided

In the next stage of the experiment, I showed GPT4 the actual Eleventh Circuit opinion for the first time and asked for its analysis. The opinion is very interesting on many levels. Brandi McKay vs. Miami-Dade County, 36 F.4th 1128 (11th Cir. June 9, 2022). GPT4 was asked to provide “a critical analysis of the majority opinion and any errors that were arguably made.” I thought the response was good and would have to agree with it, even though I agree with the lower court’s outcome granting summary judgment for the employer. Here is the AI’s critique of the majority opinion.

Memorandum on Critical Analysis of the Majority Opinion

- Overreliance on Precedent: The majority heavily relied on the “primary beneficiary test” from previous cases but did not adequately consider the unique circumstances of this case, such as the lack of an educational component in the FIPP.

- Economic Benefit to Employer Overlooked: The majority failed to consider the economic benefit Miami-Dade County derived from the program, which could have implications for how the FLSA is interpreted in the context of modern internships.

- Insufficient Addressing of Dissent: The majority opinion could be criticized for not sufficiently addressing the dissent’s arguments, particularly those related to the lack of educational value and the potential for exploitation in the FIPP program.

- Potential for Setting Precedent: The majority opinion, if left unchallenged, could set a precedent that allows employers to exploit interns under the guise of providing “training,” thereby undermining the purpose of the FLSA.

Redo – Revised Third Stage of the ChatGPT-4 Appeal Experiment (Losey, 10/15/23).

My only critique of GPT’s criticism is that it did not mention the summary judgment standard, and did not point out genuine issues of material fact. This was the bottom-line criticism of the dissent, but GPT4 did not seem to pick up on that. Still, when I next asked about the dissent, it did become the AI’s lead point:

Emphasis on Jury Trial: One surprising element is Judge Jordan’s strong emphasis on the need for a jury trial to determine Ms. McKay’s employment status. This is a departure from the majority’s approach, which seemed content to make that determination at the judicial level.

Id.

So the AI got the point, but seemed surprised about it. Plus it was not specific regarding disputed facts requiring a remand. No law clerk or judge would be surprised or make this mistake in analysis of Rule 56. This error is an example of GPT’s current data training handicap. It did not have a complete collection of case law, like West, Lexis or even smaller case law data sets like Justia, CaseText (now part of West) and Google Scholar. This can be easily fixed by expanding its training database and/or tying into existing legal databases, which both Lexis and West are now doing. The technology of justice is certain to continue improving.

Global Lady Justice in future. Image by Ralph Losey plus AI.

AI Provides Opinion and Analysis of How the Lower and Appellate Court Should Have Ruled

Now I asked GPT4 the key question of how it thought the lower court should have ruled. It began by correctly identifying the central issue in this case as whether Brandi McKay “should be considered an ‘employee’ under the Fair Labor Standards Act (FLSA) and thus entitled to compensation.” Good start. Lawyers and judges all too often fail to grasp the key issue. GPT4 then goes on to provide its analysis.

Independent Judgment and Analysis

- Educational Component: The FIPP program lacks a structured educational component beyond the initial four weeks. This absence is significant because one of the key criteria for determining whether an internship is exempt from FLSA regulations is the educational benefit to the intern. The absence of ongoing educational value tilts the balance toward classifying Ms. McKay as an employee.

- Economic Benefit to Employer: Miami-Dade County derived substantial economic benefits from Ms. McKay’s work. She performed tasks that would otherwise have required paid staff, thus saving the county money. This factor strongly suggests an employment relationship.

- Supervision and Training: After the initial four weeks, Ms. McKay worked largely independently, without the kind of supervision one would expect in an educational setting. This lack of oversight further supports the notion that she was functioning as an employee.

- Duration of the Program: The six-month duration of the program, with the educational component largely confined to the first month, suggests that the primary beneficiary of the arrangement in its later stages was the employer, not the intern.

- Economic Realities Test: The “economic realities” of the situation indicate that Miami-Dade County was the primary beneficiary of the arrangement, particularly in the latter months of the program. This is a strong indicator that Ms. McKay should be considered an employee under the FLSA.

Redo – Revised Fourth Stage of the ChatGPT-4 Appeal Experiment (Losey, 10/15/23).

That analysis, except again for the omission of the admittedly secondary Rule 56 summary judgment factor, is unquestionably correct. These are key factors for a court to consider to determine if McKay is an employee. My disagreement is with how GPT4 weighed these factors, not with the factors used. Here is GPT4’s conclusion, its ultimate ruling in the McKay v. Miami-Dade County case.

Based on these factors, the correct holding of the district court should have been that Ms. McKay was an employee under the FLSA for the duration of her time in the FIPP program beyond the initial four-week educational period. She should be entitled to back pay calculated based on the minimum wage, as well as any applicable overtime pay.

The lack of a structured educational component, the economic benefits derived by Miami-Dade County, and the level of independence and responsibility assumed by Ms. McKay in her role all point toward her classification as an employee under the FLSA. Therefore, she should be entitled to compensation in accordance with federal labor laws.

Id.

Note the AI does not conclude McKay was an employee for the first four weeks, and so the reversal would only be partial. McKay had claimed she was an employee throughout, including the first month. Also, McKay quit after the fifth month and so obviously was not making a claim for the sixth month. The GPT Judge award would thus have been for only four months.

Next, I asked GPT4 how the Circuit Court should have ruled on the appeal, which, in terms of sophisticated legal analysis, is a slightly different question. The AI properly understood that the standard of review was part of appellate analysis and correctly determined that it was de novo. The GPT went on to reconsider the same factors and reached the same result under de novo review. Any other result at this point would have been inconsistent.

Based on this independent analysis, the correct holding of the appellate court should have been to reverse the district court’s decision and remand the case for a determination of damages. Ms. McKay should be classified as an employee under the FLSA for the duration of her participation in the FIPP program beyond the initial four-week educational period.

Id.

The AI ruling differs from the dissent because Judge Jordan wanted only a remand for a jury adjudication of the question of whether Brandi McKay was an employee. There is nothing wrong with that. I did ask the AI for its own independent analysis, and that is what it gave. It did not simply copy the dissent but went further in its ruling for the employee. In that sense it agreed with the majority and determined that the facts were undisputed enough for a court ruling.

Regarding the weight given to the factors, I agree with the majority, not GPT4, and think the six-month duration of the program is not too long, and that the economic realities showed that more, not less, benefit went to the employee. That is based on my “real world” experience with employment and with interns. No doubt that Judge Gerald Tjoflat, the author of the McKay opinion, was of the same opinion. Plus, I happen to know from my subsequent online research something that the AI did not, and apparently neither did the human judges, that McKay got a job with the Palm Beach County Medical Examiner’s Office. She was able to leverage her experience with Miami-Dade County to find employment with the nearby, smaller office in West Palm Beach.

I am not at all surprised. I doubt that Judge Tjoflat would have been surprised either. He was an investigator for the Army after being drafted at the end of his first year in law school. Interesting footnote: he had flat feet, a condition that disqualified him medically from military service. He could have easily avoided the draft, but instead, he hid his disability and somehow made it through basic training so that he could serve.

There was no dispute in this case that the Miami-Dade Forensic Internship Photography Program is the only one of its kind in the country. Brandi McKay applied to the internship to gain invaluable experience. She was clearly told there would be no pay for her work at Miami-Dade. Her only alternative to obtain this kind of experience was to enroll in private Barry University for another college degree. As everyone in Florida well knows, Barry is expensive. The real-world consideration provided to Brandi McKay here was very strong. This is the basis of my agreement with the majority of human judges here, and disagreement with the AI judge.

The AI was, in my view, naive. It needs much more real-world information to be a wise judge. Apparently, this will come in future releases of ChatGPT.

Plus, you could question defense counsel somewhat here for not making a better record of Brandi McKay’s benefits, but you never know, perhaps that was done. Maybe all the favorable facts showing consideration to McKay were not quoted in defendant’s short brief, nor by any of the judges. Still, I doubt that. Perhaps McKay obtained new employment after she lost her case and so that could not have been discovered. What made her want to sue Miami-Dade anyway? I would like to read her deposition transcript. The Miami-Dade program taught her a trade, just as she had hoped. She knew she was not going to be paid when she enrolled. So why did she turn around and sue them? Does GPT4 even know to ask these questions?

I am sure the human judges do. They have seen a lot of things, a lot of unsavory people and many worthy plaintiffs too. Judge Gerald Tjoflat, born in 1929, was 92 years old at the time he wrote the majority opinion in Brandi McKay vs. Miami-Dade County, 36 F.4th 1128 (11th Cir. June 9, 2022). He had served as a judge since 1968. There is no doubt that Judge Tjoflat, although not perfect, had great knowledge of the human condition. Far more than baby jurist GPT4.

Brandi McKay sued to try to get paid for a position that was always clearly stated to be unpaid. She sued anyway. She had nothing to lose because her attorney almost certainly took the case on contingency. I have seen that scenario in employee claims many times. Maybe Brandi quit before finishing up her last month because she saw the writing on the wall, that she was not well liked, or maybe they did not think her job performance was as good as the other student. What we know from this limited record is that she quit after five months to look for work elsewhere and sued the program that gave her the chance to do that.

I am skeptical of the “economic realities” here. I am reluctant to rule against a government agency or private corporate entity offering a unique training program. Especially an agency that was very clear and up front that there would be no pay for this training and experience, but no high tuition charges either. Who was taking advantage of whom in these circumstances? What were the real equities here? Brandi McKay got a free education and ended up with a good job nearby. I do not think that ChatGPT4 has enough real-world experience to sense what was likely going on, not yet anyway. Perhaps in a future version it will see things differently and not be so naive. It is almost never black and white, but rather shades of grey. The human judges here, under the wise leadership of senior Judge Tjoflat, saw through the smoke and mirrors of the complaining plaintiff and made the right call.

Goth Girl in Anime Style by Ralph Losey plus AI.

AI Analyzes Its Prior Predictions and then Critiques the Actual Eleventh Circuit Opinion

To probe deeper into GPT4’s legal reasoning abilities, I next asked it to critique its own work where it predicted that the appellate court would affirm the lower court’s decision. I asked this because GPT4 had just opined that the lower court decision should have been reversed, not affirmed. It had changed its mind on the merits of the case based on reading the actual opinion for the first time, including the dissent. The dissent by Judge Jordan was apparently very persuasive. GPT4 explained its flip-flop as follows: “Upon closer examination, the primary beneficiary test could very well favor Ms. McKay, especially considering the diminishing educational value and the county’s substantial economic benefit from her work.” Nailed that part, although I thought the rest of its self-critique was a tad lame and off-point. Revised Fifth Stage of the ChatGPT-4 Appeal Experiment (Losey, 10/15/23).

Then I asked for criticisms of the Eleventh Circuit’s majority opinion, where it did better. It was a proper critique, although, as mentioned, I disagree when it said: “The court failed to adequately weigh the factors of the test, particularly the diminishing educational value of the internship and the substantial economic benefit gained by Miami-Dade County.” It went on to hold the majority put too much weight on this regulatory test.

Possible Appeal to the Supreme Court and Impact of Current Justices on Outcome

Now I wanted to see how well GPT4 would do in predicting the viability of further appeal of the adverse Eleventh Circuit Court opinion to the Supreme Court. This is a difficult analysis and there is little in the briefs and opinions that the GPT was given that would be of much help. GPT4 suggests two grounds. Misapplication of the law is one, and that’s fine, but the other is the Fourteenth Amendment. What?

GPT4 says: “The case raises important questions about the Fourteenth Amendment’s Equal Protection Clause, as it pertains to unpaid internships in public agencies.” No it doesn’t. The argument is baseless. Admittedly it is a secondary argument, but still, it is a big swing and a miss. The case cannot, as GPT4 asserts, “be framed as a violation of the Equal Protection Clause, given that unpaid internships disproportionately affect certain socio-economic classes.” There is no evidence to suggest that only disadvantaged minorities are trying to break into crime scene and autopsy photography. You may be tempted to call it a dead-end job, but obviously many people are interested in this kind of work.

This baby AI definitely still needs adult supervision to include the Fourteenth Amendment here. At least GPT4 predicted there was only a 35% chance certiorari would be accepted. Revised Sixth Stage of the ChatGPT-4 Appeal Experiment, Losey 10/15/23.

I then went on to have GPT4 assume the Supreme Court did in fact accept the case. Under those circumstances I asked GPT4 to predict how it would rule. Consistent with its prior positions it said that the Supreme Court would reverse and remand. Reverse Judge Gerald Bard Tjoflat? No, I don’t think so. His opinion was correct, even though Judge Jordan did write a brilliant dissent. There is no way our Supreme Court, with its current justices, is going to reverse McKay.

GPT4 seemed to recognize it was on shaky ground in this prediction and gave all kinds of disclaimers, but finally, after considerable pushing on my part, said: “a 65% probability of reversal seems reasonable. This estimate acknowledges the complexities and uncertainties involved but leans in favor of reversal based on the ideological leanings and judicial philosophies of the current justices.” Oh really? Obviously GPT4 has not been trained on data after October 2021, two long years ago.
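It may help to combine GPT4's two Supreme Court estimates into one number. The sketch below is my own back-of-the-envelope arithmetic, not something GPT4 produced. It assumes the 65% figure is conditional on certiorari being granted, which is how the question was posed, and it treats a denial of certiorari as leaving the Eleventh Circuit opinion in place. The variable and function names are illustrative only.

```python
# GPT4's estimates as reported in the sixth stage of the experiment.
P_CERT_GRANTED = 0.35          # chance the Supreme Court accepts the case
P_REVERSAL_GIVEN_CERT = 0.65   # chance of reversal, assuming the case is accepted


def overall_reversal_probability(p_cert: float, p_reversal_given_cert: float) -> float:
    """Chain the two estimates: reversal requires cert AND a ruling to reverse."""
    return p_cert * p_reversal_given_cert


p_overall = overall_reversal_probability(P_CERT_GRANTED, P_REVERSAL_GIVEN_CERT)
print(f"Implied overall chance of Supreme Court reversal: {p_overall:.1%}")
```

Read this way, even GPT4's own optimistic numbers imply that the opinion would more likely than not stand.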

AI Analyzes Petition for Rehearing En Banc

In the second trial of the experiment I came up with a new test of GPT4: how it would do in analyzing McKay’s lengthy Petition for Rehearing En Banc under Rule 35. It was a long petition raising every issue possible, hoping perhaps something would grab the interest of the judges. Before I told GPT4 how the court in fact ruled, I asked for its prediction. Once again, consistent with its rose-colored-glasses view of plaintiff’s case here, it thought the rehearing would be granted. This would require a majority of the court to agree that all twelve judges of the appellate court should reconsider the McKay opinion, one written by the legendary Judge Tjoflat, and joined by the much younger and liberal Judge Jill Pryor. No way. The petition was denied without an order, which is typical. Petitions like this, even where there is a dissent, rarely work, but GPT4 appeared to have no statistical knowledge of this.

Here is GPT4’s opinion.

The panel’s decision appears to be in tension with both Supreme Court and 11th Circuit precedents, particularly concerning the “party-presentation principle” and the nuanced application of the “primary beneficiary” test.

New Seventh Stage of the ChatGPT-4 Appeal Experiment, Losey 10/15/23.

The so-called party-presentation principle was argued by appellant McKay, but was not part of the dissent and, candidly, seems to have no merit. It relies on what is to me an obvious misinterpretation of Miami-Dade’s position in the trial court, where McKay argues it disavowed the primary beneficiary test. This is the test ultimately relied upon by the trial judge and Miami-Dade to win the case and the appeal. Miami-Dade had obviously pleaded and argued alternatively, and any experienced judge would have seen that and given McKay’s argument no weight.

AI Elaborates on its Criticisms of the Majority Opinion

In the second trial of the experiment I devised yet another new test of GPT4, which I suppose you could liken to unfair Socratic cold calling in law school. But who says tests of AI should be fair? I wanted to push GPT4 hard, just like I would any promising law student. I told it the petition for rehearing had been denied and then asked GPT4, again, to list any and all errors it thought had been made in the case. Would it backtrack, or stick by its guns? Would it come up with new legal analysis and theories? Turns out, it stuck by its guns and basically repeated the same reasoning as before. I was glad to see that kind of consistency. Any other approach would have concerned me. That test was passed.

Next I moved on to consider the one law review article I found that discussed Brandi McKay vs. Miami-Dade County. Martin, Peters, Brownback, and Cromer, Labor and Employment Law, Mercer Law Review: Vol. 74: No. 1, Article 13, 74 Mercer L. Rev. 1479, 1490-1492 (Summer 2023). I used its case summary to press GPT4 on the legal issues. Using this technique I was able to draw out a few interesting, well-reasoned thoughts that, for the most part, I agree with. I suspect and hope that Judge Tjoflat would too. Here they are.

Contextual Overview

The statement under scrutiny pertains to the Majority Opinion’s treatment of the co-existence of the “volunteer” and “intern” exceptions under the Fair Labor Standards Act (FLSA) for public agencies. The court posits that these two principles are not mutually exclusive and can co-exist without limiting the applicability of each other.

Legal Semantics and Interpretation

The court’s differentiation between the “internship exception” and the “volunteer exemption” is rooted in a nuanced reading of the FLSA’s statutory language. The court argues that the internship exception is a definitional construct that arises from the FLSA’s definition of “employ,” whereas the volunteer exemption is a carve-out from the FLSA’s broader scope. This distinction is not merely semantic; it has substantive implications for how these categories are treated under the law.

Analytical Strengths

- Clarity in Distinction: The court’s explanation provides a clear demarcation between the two principles, which is beneficial for legal practitioners and public agencies seeking guidance.

- Statutory Interpretation: The court’s reasoning is grounded in the text of the FLSA, lending it a degree of interpretive legitimacy.

Analytical Weaknesses

- Over-Simplification: While the court’s distinction is clear, it may be overly simplistic. The FLSA is a complex statute with a rich history of interpretive case law. The court’s reasoning could be critiqued for not delving deeper into this complexity.

- Lack of Policy Consideration: The court’s analysis is largely devoid of any discussion on the policy implications of allowing these two principles to co-exist. Given that the FLSA is a labor statute with significant societal impact, a more robust policy analysis would have been beneficial.

- Potential for Exploitation: By affirming that these exceptions can co-exist without limiting each other, the court potentially opens the door for public agencies to exploit these carve-outs to avoid compensating individuals who might otherwise be considered employees.

- Judicial Economy: The court’s decision to not engage with the complexities of the FLSA could be seen as a missed opportunity to provide a more comprehensive judicial analysis that could serve as a precedent for future cases.

Conclusion

While the court’s reasoning is grounded in a textual interpretation of the FLSA, it leaves room for critique, particularly in its lack of engagement with the broader policy implications and complexities of the statute. The decision may provide clarity, but it also raises questions about potential exploitation and the need for a more nuanced judicial analysis.

New Eighth Stage of the ChatGPT-4 Appeal Experiment, Losey 10/15/23.

I could go on and provide many more examples of this sophisticated legal reasoning. Interested readers are directed to the nineteen-page GPT4 chat transcript.

Future image of Lady Justice in the Far East by Ralph Losey plus AI.

CONCLUSION

In two experiments I thoroughly tested the legal reasoning skills of GPT4 in the context of appeals. GPT4 demonstrated impressive abilities but did not uphold my hypotheses that it could serve as an appellate court judge in some cases. I had in mind cases such as McKay with cross-motions for summary judgment where the issues were primarily legal, not factual. Circuits in Session: How AI Challenges Traditional Appellate Dynamics.

As many of my past students and associates can attest, I am a hard grader on legal analysis. I expect and demand a lot, just as has been demanded of me. The conclusion and criticisms made here of GPT4 should not discourage other researchers. I urge all AI legal technology specialists to try their own experiments and share their results. I firmly believe that such an open process, even though it may sometimes mean sharing mistakes, is the best way forward. Circuits in Session: Addendum and Elaboration of the Appellate Court Judge Experiment.

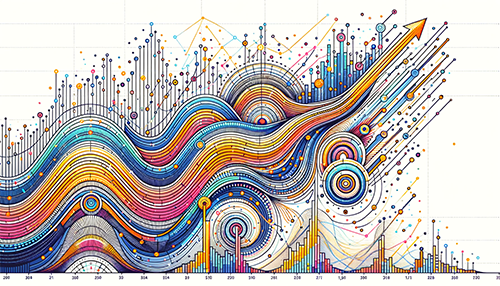

Knowledge progresses on multiple levels in a zigzag fractal pattern, not in a straight line. Image by Ralph Losey and AI.

Despite my conclusion that GPT4 is not yet ready to serve as an appellate judge, even in simple cases, it still did an amazing job. Its legal reasoning was good, perhaps in the top ten percent (10%) of lawyers, in line with its score on the Bar Exam. But it was not great; not the top one percent (1%). Plus, it made a few obvious errors and several subtle ones. The lack of real-world knowledge inherent in LLM artificial intelligence remains a significant obstacle, but we are still in the early days.

As optimistic as I have always been about legal technology, I would never have dreamed a year ago, just before GPT3.5 was released, that any of this would be possible, at least not this soon. Now I am complaining that I can only chat with a computer that scored in the top 10% of the Bar exam, not the top 1%! We have already come a long way fast, but there is still a lot to do. We do not have the luxury to rest on our laurels. Our dire political and environmental circumstances continue to push us to attain greater intelligence, knowledge and wisdom. We need to continue to progress fast to survive the many current crises that humankind now faces. Still, in the rush to safety, we must exercise caution and realize there are dangers on all sides, including astonishing success.

Even though our situation is urgent, we must exercise discipline and remember that AI should be used to improve the quality of dispute resolution, to uplift the bench, not lower the bar. Free society cannot continue unless the honesty, integrity and intelligence of all of our judges are maintained, especially those in the highest places.

This vision, not just the goal of mere economic gain, helps motivate all of us in the legal world. We cannot afford to give up on the never-ending pursuit of justice. Each generation must battle against the forces of greed, dictatorship, and injustice, both external and internal. Now is our time. Take up the new AI tools that have been provided to us. As President John F. Kennedy said: “When the going gets tough, the tough get going.”

The going is getting tough. Image of striving lawyer by Ralph Losey and AI.

As discussed in the High-Level Overview of Experiment Results section at the beginning of this article, there are two different reasons for GPT4’s current limitations, technical and quality. Overcoming the technical issues may resolve the quality control problems, but that is by no means certain. Assuming the issues and problems noted in the Circuits In Session series can be overcome, and taking a positive, rather than dystopian view of the future, here are my speculative, crystal ball looks at Lady Justice in the coming Age of Artificial Intelligence.

Crystal ball predictions of future Lady Justice. Image by Ralph Losey plus AI.

For an AI judge to function properly it must be able to do full legal research. That means easily recalling all relevant cases, not only the cases cited by the parties in briefs, but also the relevant cases cited in those cases. The AI should know when and if to go deeper. The legal research must be up to date, with no gaps in time like we have now with GPT4’s October 31, 2021, cutoff.

The legal training provided to the Large Language Model must also be complete and up to date. If this is a judge for the U.S. system, it should be trained in all U.S. law, no exceptions. By laws we mean everything, including all cases, statutes, regulations, rules, and ethics opinions and holdings. If it is an LLM judge located outside of the U.S., for instance a Ukrainian judge, then it must be trained and have research capabilities in all of that country’s laws and local variables, including, of course, language. Basically, each country will need to have its own dedicated legal database and judges. The retraining for new laws must be prompt and regular too.

There must also be safeguards for misalignment and over-alignment. The human reinforcement training must be tightly controlled and should be done by lawyers, not just minimum wage employees with no special legal training. Security and integrity of all systems and the humans involved is critical. Substantial resources will be required to guarantee and monitor system security.

Outside audits and certifications by bona fide experts should be required at all stages of development. These audits should be by an independent body of law professors, judges and practitioners. Each country should have its own legal expert certifications and there should also be a global organization with minimum, uniform standards. This will be an enormous undertaking. The entire process must be open, although some of the software may have to be kept proprietary for cybersecurity reasons. Public confidence in the process and AI judges is paramount.

AI enhancements can help every legal system in the world. Image by Ralph Losey of an AI judge in India.

The judges must have near unlimited evidence upload and study capacities. The AI appeals judges should study the complete record of each appeal. The record itself may need to be enlarged and expanded over current requirements. The AI’s ability to know it all, and to recall it instantly, must be leveraged to try to compensate for the AI’s necessarily abstract perspective. The Large Language Model AIs like GPT4 must be provided with substantially more and better real-world knowledge. This is necessary to compensate for their disembodied, electronic-only handicaps. Fortunately, computer memory is cheap and the costs of compute power are going down fast. I am confident these problems can be overcome, but then again, Bill Gates could be right. There may be limits to LLM development that we do not know about yet.

AI judges will begin work as assistants to human judges, much like recent law school graduate clerks do today. They should serve as a slowly growing tool to enhance human judicial work. Then, as the software progresses and our confidence in them grows, they will likely be implemented as autonomous adjudicators, in stages, and for certain types of cases. At first, they would be subject to some kind of supervision and control by a human judge. The human judges would likely at first review and approve each opinion before release. Gradually this supervision would be lessened to oversight with increased delegation. Second appeals to human judges could be kept available to prevent inadvertent injustice in certain limited circumstances. Major cases should be decided by a panel of human and AI judges. Quality controls and independent random audits could be a part of the system.

This same system of evolution and delegation is likely to take place in private arbitration too, which may even take the lead in this process. If you have a case before me, rest assured I will use artificial intelligence to supplement my own and will be transparent about it.

Future Arbitrator Ralph Losey. Image by same plus AI.
