Introduction

In our first post, we will dive into the world of email datasets. Emails are a critical component of business communication, and AI plays a significant role in managing, sorting, and even responding to them. We will explore how precision and recall can be applied to document-based datasets to evaluate the effectiveness of AI in handling email communications. This post is designed to set a strong foundation for understanding how to measure AI success in processing and analyzing written content.

AI metrics in legal document analysis

In the legal sector, there are many times when we might want to take a corpus of documents and split them into different categories, such as finding responsive content for first-pass review. This is typically a long and laborious process to complete by hand. Many tools are out there that can help with these classification tasks, and it can be useful to compare how they perform.

The challenge of classifying legal documents

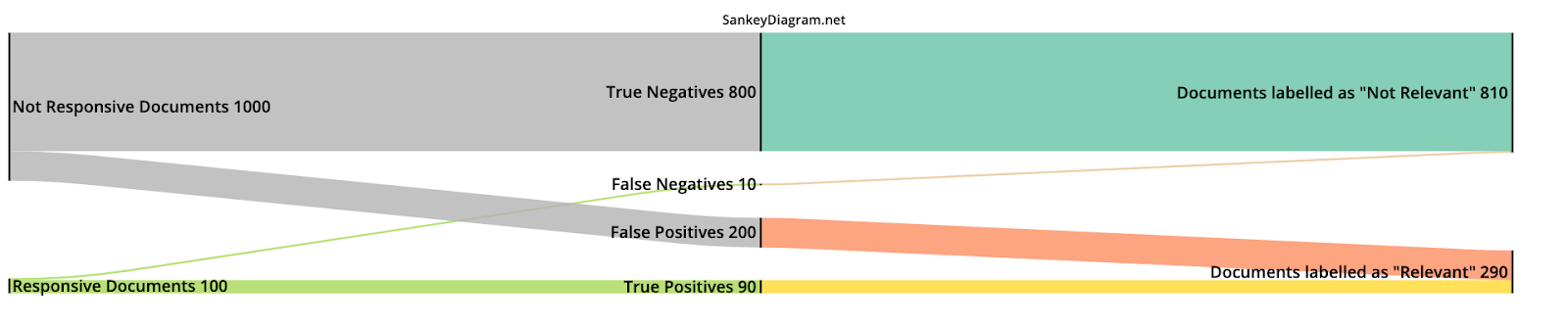

Suppose you have a corpus of emails and a definition of a “responsive” document, with all other documents being “non-responsive.” Let’s say that the corpus has 1,100 documents in total, and 100 of them are responsive, but you don’t know which ones they are. You set your team to work to classify the documents into “Relevant” and “Not Relevant” and get the results shown below.

In this example, your team correctly found 90 out of 100 responsive documents, which means that they achieved a “Recall” of 90%. At the same time, they rejected 800 out of 1,000 Non-responsive documents, which means they achieved a “Rejection” of 80%. In an ideal world, both Recall and Rejection would be 100%, but this is usually not possible, especially for a subjective classification task like first-pass review.

Understanding recall and rejection metrics

In this example, we introduced two terms:

- Recall: The fraction of responsive documents correctly classified as “Relevant”

- Rejection: The fraction of not responsive documents correctly classified as “Not Relevant”

These help us to understand the performance of the classification method, and they are in tension with each other. Increasing Recall unusually means decreasing Rejection, and vice versa.

Precision: A key measure of classification success

Another way to measure how well a method performs is to calculate the “Precision.” In the example, Precision is equal to the fraction of documents that are classified as Responsive that are actually Relevant. In the example above, there were 290 documents classified as Responsive, but only 90 of these were actually Relevant. This gives a Precision of 90 / 290 = 31%. This Precision isn’t great, as it means that you will spend 69% of your time in the next stage considering content that is not relevant.

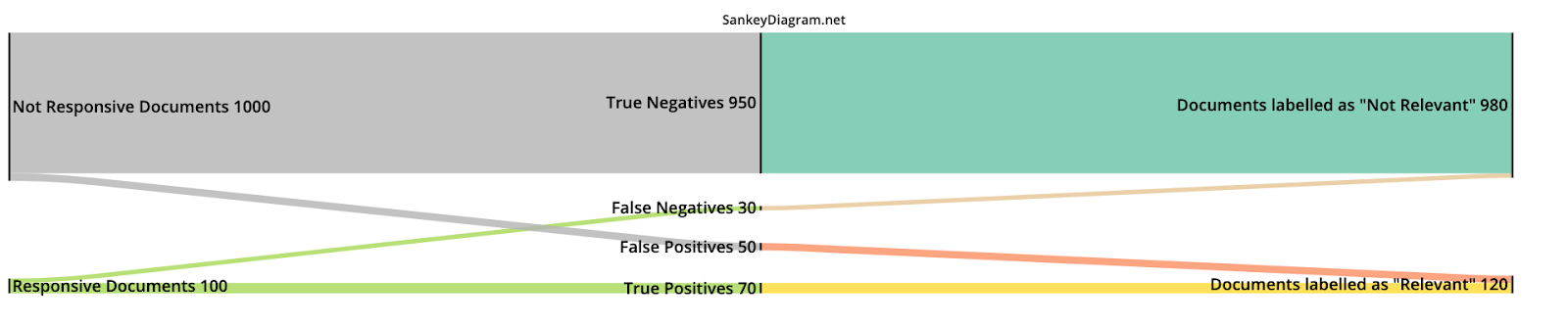

You go back to your team and tell them to perform the first-pass review again, but this time to be more strict about what content they label as “Responsive.” They come back with the following results:

This time, the Rejection increased to 95% (950/1000), and the Precision increased to 58% (50/120), but the Recall fell to 70% (70/100). By having a stricter definition of “Relevant,” you lost nearly one-third of the Responsive emails.

Balancing recall, rejection, and precision

In general, it’s important to keep Recall high so that you keep as many Responsive documents as possible, keep Rejection high so that you reject as many Non-Responsive documents as possible, and, as a result, keep Precision high so that you will spend a higher proportion of your time considering Responsive content. This has always been a challenge in legal review, and datasets keep getting bigger and more diverse, making it increasingly difficult to find the most relevant content.

Human variability in document classification

This challenge is made harder by the interpersonal and intrapersonal variability humans exhibit when making decisions. Different people will take different approaches and reach different conclusions. The same person may classify documents differently depending on their mood, experience of the case, or factors such as time pressure. In any case, we will never know the true values of Recall, Rejection, and Precision. We can only estimate based on past experience.

The role of technology in enhancing efficiency

We can use technology to speed up the classification process, which works quite well for documents. Technology-Assisted Review (TAR) and Continuous Active Learning (CAL) have been around for a while and help separate Relevant from Non-Relevant content more efficiently while estimating Recall and Precision. However, they still involve a fairly large amount of manual labor and require well-defined documents.

The future of document classification with Generative AI

With the advent of Generative AI, it is possible to use Large Language Models (LLMs) to develop even more powerful tools with the possibility of completely removing human labeling. LLM-based classification can typically achieve a Recall of 90% (with a Rejection of 63% and Precision of 71%) out of the box for email. While there will probably always be a place for TAR and CAL in a review workflow, larger and more complex datasets will start to depend more and more on LLMs instead, and we will need to start adopting LLMs to stay competitive.

Looking ahead: Conversational data analysis

Next time, we’ll examine Recall, Rejection, and Precision in the context of streams of conversational data, such as Slack and Microsoft Teams. What is easy to define for emails is harder to define for conversational data.

[View source.]