The EU AI Act and its Legislative Process

On 8 December 2023, as a crucial step in the legislative process, the European Parliament and the Council reached a political agreement on the essential parts of the AI Act (see our article of 11 December 2023). Once the final wording has been agreed, the AI Act will be formally adopted and will enter into force following its publication in the EU’s Official Journal (which is expected in the coming weeks).

The AI Act aims to create a regulatory framework for AI systems in the European Union. It will provide rules for AI systems that are placed on the market or put into service in the EU (regardless of where the provider of the AI system is located), for users of AI systems, and for the use in the EU of output produced by AI systems, even if the providers and/or users of such AI systems are not located in the EU. The most important definitions to watch out for are as follows:

The AI Act will therefore potentially affect, in particular, (i) any company whose management and/or employees use AI tools in their daily work; (ii) any company that customizes an AI tool and implements it for internal or external use; and (iii) any company that develops its own AI model. Recent practice shows that the use of sophisticated AI tools for everyday work tasks, and even the customization of third-party AI models, will be very widespread. Significantly more companies will therefore be subject to the AI Act’s regulatory framework than was initially expected when the proposal was published in 2021.

Risk-based approach and rules for general-purpose AI models

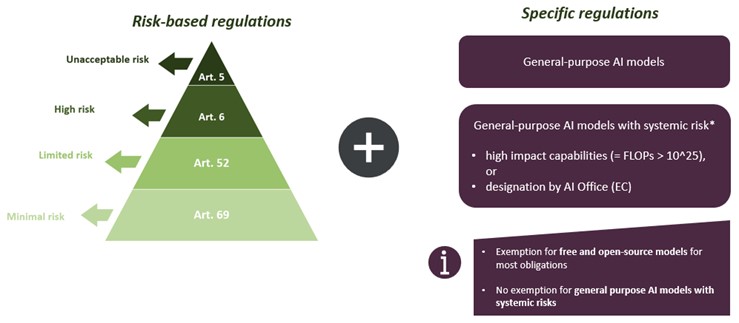

Discussions on whether and how general-purpose AI models ("GPAI" models, which are trained on large amounts of data to perform a range of tasks such as generating text, computer code, video, or images, or conversing in natural language) should be specifically regulated continued until the day of the political agreement. In the end, the rapid development and use of so-called large language models in 2023 led European lawmakers to consider such regulation necessary, and to distinguish between the rules and obligations that apply to all GPAI models and the additional ones that apply only to particularly powerful GPAI models posing “systemic risk” (read more in our article here).

The following graphic shows the concept of the AI Act’s approach to regulating different AI systems (based on an unofficially published compromise text regarding GPAI models, which can be found here):

In particular, the AI Act will establish detailed and comprehensive obligations and requirements for so-called "high-risk AI systems". These obligations essentially fall into four categories: transparency obligations, data governance, risk management, and IT security obligations.

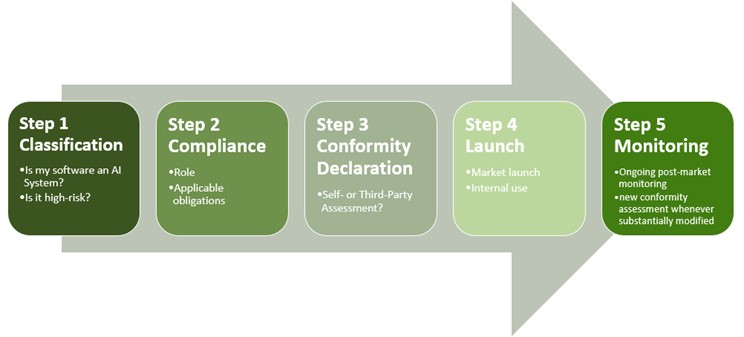

Regarding high-risk AI systems, two broad groups are currently envisaged: AI systems that are intended to be used as components of products covered by existing EU product safety legislation (e.g., the EU Medical Device Regulation), and AI systems used in specific, purpose-related areas (standalone high-risk AI systems). The following graphic shows our proposed five-step approach to AI Act compliance for high-risk AI systems:

Other relevant EU laws to look out for

In particular, the AI Act, which can be seen as a specific form of product safety regulation, will be accompanied by revised and new EU liability rules for AI. The Council and the European Parliament reached a political agreement on a revised Product Liability Directive on 14 December 2023. Once formally adopted and in force, it will replace the current Product Liability Directive, which dates back to 1985. The revision aims to adapt Europe’s product liability rules to the digital age (e.g., by bringing AI systems, software, and updates within their scope), to the circular economy, and to more complex supply chains, and to strike a better balance in the enforcement of the right to compensation.

In addition, the European Commission has proposed a first-of-its-kind AI Liability Directive, which would establish a civil liability regime for damage caused by AI-enabled products and services and is seen as the "other side of the same coin" to the AI Act. The proposal is expected to be discussed in Q1 2024.


Data protection and intellectual property laws and regulations, as well as guidance issued by regulatory authorities, should also be considered alongside the AI Act.

The work ahead

The various new requirements and potentially severe implications under the AI Act require timely and thorough preparation by companies affected by the new rules:

- Impact and Gap Analysis: The first step in preparing for the AI Act is to analyze the impact of the AI Act on the company, its business, and the intended use cases for AI systems, and to carry out a compliance gap analysis.

- Compliance Strategy: For companies affected by the AI Act, developing an appropriate AI governance framework (including appropriate digital governance structures, digital governance processes, and AI policies) is essential, not only to ensure compliance with the legal requirements under the AI Act (and other legal regimes) but also to secure the continued and productive development and/or use of AI systems in the future.

- Compliance Documentation & Monitoring: New documentation and monitoring obligations require companies to rethink how they draft and manage their documentation and monitoring structures.

Outlook

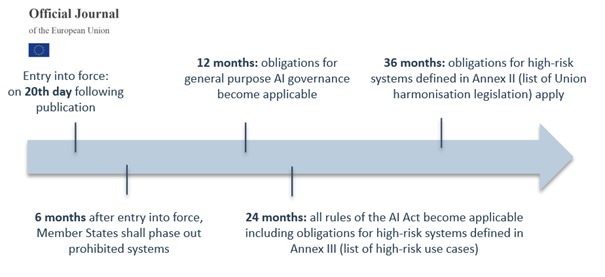

The AI Act will enter into force on the twentieth day following its publication in the EU’s Official Journal (which is expected in the coming weeks). It will generally apply 24 months after its entry into force, but certain provisions will apply earlier and others later. Counting from entry into force:

- After six months: the phase-out of prohibited AI systems.
- After twelve months: the obligations for GPAI models.
- After 24 months: most provisions, including the obligations for high-risk systems defined in Annex III (the list of high-risk use cases).
- After 36 months: in particular, the obligations for high-risk systems defined in Annex II (the list of Union harmonisation legislation).