Introduction

On 1 and 2 November 2023, the UK hosted the world's first summit on artificial intelligence safety at Bletchley Park (the Summit). Bletchley Park was once the top-secret home of Britain's World War II cryptographers, who decoded secret enemy messages there. To some, the choice of venue symbolises that the United Kingdom will once again be at the forefront of decrypting complex codes: in this case, artificial intelligence (AI).

There is no denying that the technological landscape and the financial services industry have irrevocably changed in the last two decades. Since the mass hysteria over technological change at the turn of the new millennium (and its associated Y2K 'madness'), the industry has experienced wave after wave of technological developments, and is now entering what one might consider the official beginning of the era of AI, or the 'AI Age'.

This article will explore the existing regulatory framework of AI within the UK financial services industry, make a brief comparison against the global landscape and attempt to predict what lies ahead.

Key Building Blocks or Puzzle Pieces?

In 2019, the Bank of England (BoE) and Financial Conduct Authority (FCA) (jointly, the Regulators) conducted a joint survey to gain an understanding of the use of machine learning (ML) in the UK financial services industry (the First ML Survey). The First ML Survey was sent to almost three hundred firms, including banks, credit brokers, e-money institutions, financial market infrastructure firms, investment managers, insurers, non-bank lenders and proprietary trading firms, with a total of 106 responses received. The Regulators subsequently published a joint BoE and FCA report,1 analysing the responses (the First ML Report). Most notably, it found that: (i) ML had the potential to improve outcomes for businesses and consumers; (ii) ML was increasingly being used; and (iii) there was a need for an effective and evolving risk management framework.

In response to the First ML Report, the Regulators launched the UK's Artificial Intelligence Public-Private Forum (AIPPF) in October 2020. The AIPPF was intended to further the dialogue between experts across financial services, the technology sector and academia, with the aim of developing a collective understanding of the technology and exploring how the Regulators could support the safe adoption of AI (and ML as a subset of AI) in financial services. In February 2022, the AIPPF published its final report,2 exploring various barriers, challenges and risks relating to the use of AI in financial services and potential ways to address them (the AIPPF Report). The AIPPF Report made it clear that the private sector wanted regulators to have a role in supporting the safe adoption of AI and ML, if it is to benefit from the deployment of such technology.

Around the same time, the UK government (the Government) sought to develop a national position on regulating AI. In July 2022, it published a Policy Paper entitled 'Establishing a pro-innovation approach to regulating AI' (the First PP).3 The First PP made it clear that AI was seen as a tool for 'unlocking enormous opportunity' and proposed to establish a framework that was:

- context specific, by proposing to regulate AI based on its use and impact within a particular context, and to delegate the responsibility for designing and implementing proportionate regulatory responses to regulators;

- pro-innovation and risk-based, focusing on 'high risk concerns' rather than on 'hypothetical or low risks' associated with AI, in order to encourage innovation;

- coherent, by creating a set of cross-sectoral principles tailored to AI, with regulators at the forefront of interpreting, prioritising and implementing such principles within their sectors and domains; and

- proportionate and adaptable, with regulators being asked to consider 'lighter touch options' (such as guidance or voluntary measures) in the first instance and where possible, working with existing processes.

On 11 October 2022, the Regulators published the results of a second survey into the state of ML in UK financial services (the Second ML Survey).4 The Second ML Survey found that the adoption of ML had increased since 2019 and that financial services firms were using their existing data governance, model risk management and operational risk frameworks to address the use of AI and ML. It also highlighted the need for effective and evolving risk management controls to ensure that AI and ML are used safely.

On the same day, the Regulators and the Prudential Regulation Authority (PRA) also published Discussion Paper 5/22 'Artificial Intelligence and Machine Learning'5 (DP5/22) in response to the AIPPF Report and in line with the First PP. Most notably, DP5/22:

- aims to seek an understanding of how the FCA and PRA, as supervisory authorities, may best support the safe and responsible adoption of AI in the UK financial services industry in line with their statutory objectives;

- explores whether there should be a regulatory definition for the term 'AI' in the supervisory authorities' rulebooks to underpin specific rules and regulatory requirements, or whether an alternative approach should be adopted;

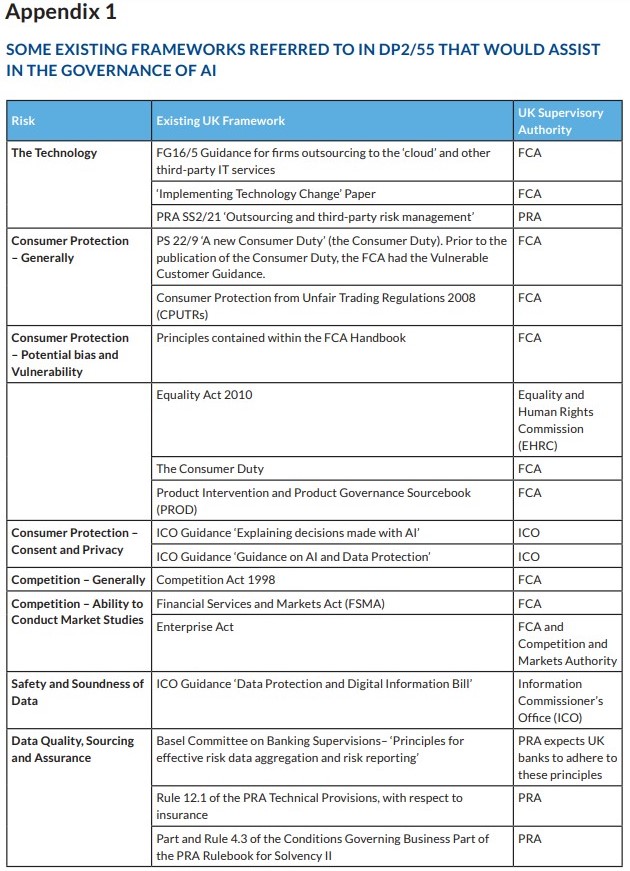

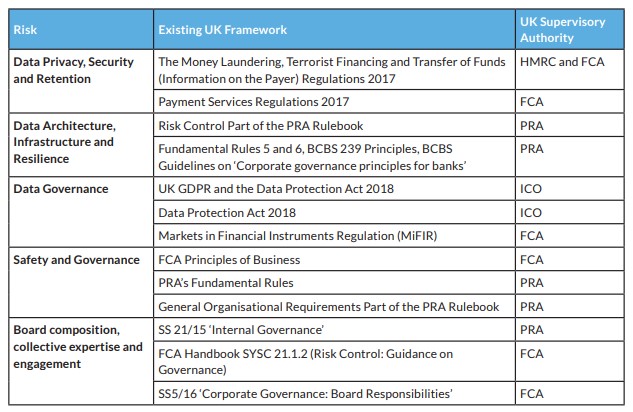

- assesses how existing UK legal requirements and guidance, referred to in Appendix 1, may apply to AI; and

- highlights the importance of human involvement in the 'decision loop' when using AI, as a valuable safeguard against harmful outcomes. DP5/22 further highlights that current Model Risk Management regulation in the UK6 is of very limited use in addressing the risks associated with AI in the realms of ethics, accuracy and cybersecurity.

In December 2022 and in line with the First PP, the Government set out an ambitious ten-year plan7 to make the UK 'a global AI superpower' (the National Strategy).

On 29 March 2023, the Government published a Policy Paper entitled 'A pro-innovation approach to AI regulation'8 (the Second PP). The Second PP confirms the intention to create a 'light touch' framework that seeks to balance regulation with encouraging responsible AI innovation. Further, it confirms the intention to use existing legislative regimes, coupled with proportionate regulatory intervention, to create a 'future-proof framework' which is adaptable to AI trends, opportunities and risks.

Most recently, on 26 October 2023, the Regulators published Feedback Statement FS2/23 'Artificial Intelligence and Machine Learning' (FS2/23),9 providing a summary of responses to DP5/22. The Regulators have made it clear within FS2/23 that the document does not include policy proposals, nor does it signal 'how the supervisory authorities are considering clarifying, designing, and/or implementing current or future regulatory proposals' on AI. Notable points raised by respondents include:

- a financial services sector-specific regulatory definition for AI would not be useful as it may (i) quickly become outdated; (ii) be too broad or narrow; (iii) create incentives for regulatory arbitrage; and (iv) conflict with the intended 'technology-neutral' approach;

- a 'technology-neutral', 'outcomes-based', and 'principles-based' approach would be effective in supporting the safe and responsible adoption of AI in financial services;

- further regulatory alignment in data protection would be useful as current data regulation is 'fragmented';

- consumer protection, especially with respect to ensuring fairness and other ethical dimensions, is an area for the supervisory authorities to prioritise; and

- existing firm governance structures and regulatory frameworks such as the Senior Managers and Certification Regime are sufficient to address AI risks.

The Global Landscape

United Kingdom – Pragmatic

Given the above, one could summarise the UK's AI regulatory landscape in financial services using buzzwords like 'optimistic', 'light-touch', 'industry-led' and 'technology neutral', with a focus on keeping senior managers ultimately accountable. These words may appear to be new or exciting but, in our view, the approach they describe is very much in line with how the UK has historically regulated new technologies.

By way of example, the UK did not create an entirely new regime for cryptoasset activities, but rather categorised cryptoassets as a new asset class and brought them within the UK's existing regulatory perimeter. Another example is the incorporation of algorithmic trading into the MiFID framework. In other words, the UK has historically taken the pragmatic approach of using the systems it already has in place, with slight tweaks, and we suspect it will be the same with AI.

Europe – Extensive AI Regime

Across the English Channel, the approach in the EU is slightly different. In April 2021, the European Commission proposed the first EU regulatory framework for AI (the Draft AI Act). Broadly, the Draft AI Act:

- assigns applications of AI to three risk categories: (1) applications and systems that create an unacceptable risk (e.g., government-run social scoring of the type used in China) are prohibited; (2) high-risk applications (e.g., a CV-scanning tool that ranks job applicants) are subject to specific legal requirements; and (3) low or minimal risk applications are largely left unregulated;

- seeks to establish a uniform definition for AI that could be applied to future AI systems;

- aims to establish a European Artificial Intelligence Board, which would oversee the implementation of the regulation and ensure uniform application across the EU. The body would be tasked with releasing opinions and recommendations on issues that arise as well as providing guidance to national authorities; and

- provides that non-compliant companies will face steep fines up to EUR 30 million, or if the offender is a company, up to 6 percent of its total worldwide annual turnover for the preceding financial year, whichever is higher.
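The 'whichever is higher' penalty cap in the last bullet can be illustrated with a short worked sketch. This is not taken from the Draft AI Act itself: the function name `max_fine_eur` and the turnover figures are hypothetical, and the sketch assumes only the two thresholds quoted above (EUR 30 million and 6 percent of total worldwide annual turnover).

```python
# Illustrative sketch only: the function name and the example turnover
# figures are hypothetical; the two thresholds are those quoted in the text.

FLAT_CAP_EUR = 30_000_000   # flat ceiling quoted above
TURNOVER_PERCENT = 6        # percentage of worldwide annual turnover quoted above


def max_fine_eur(worldwide_turnover_eur: int, is_company: bool = True) -> int:
    """Return the upper limit of the fine, in whole euros.

    For a company the ceiling is the higher of the flat cap and 6 percent
    of turnover for the preceding financial year; otherwise the flat cap
    applies. Integer arithmetic avoids floating-point rounding.
    """
    if not is_company:
        return FLAT_CAP_EUR
    turnover_based_cap = worldwide_turnover_eur * TURNOVER_PERCENT // 100
    return max(FLAT_CAP_EUR, turnover_based_cap)


if __name__ == "__main__":
    # Hypothetical firm with EUR 1 billion turnover: 6 percent exceeds the flat cap.
    print(max_fine_eur(1_000_000_000))
    # Hypothetical firm with EUR 200 million turnover: the flat cap is higher.
    print(max_fine_eur(200_000_000))
```

On these assumed figures, the turnover-based limit only overtakes the flat cap once worldwide turnover exceeds EUR 500 million, which is why the percentage limb matters most for large groups.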

Until the EU reaches an agreement on the Draft AI Act, various stakeholders are currently governed by the application of existing laws and regulations (such as the EU's General Data Protection Regulation), and to an extent, self-regulation by corporates by adhering, for example, to the voluntary ethical guidelines for AI published by the European Commission10 or Microsoft.11

More recently, on 17 October 2023, the European Securities and Markets Authority (ESMA) published a speech entitled 'Being ready for the digital age' (the Speech). The Speech focused on technological developments and their impact on the operations of financial institutions. Specifically, it explained that ESMA is working on new projects with EU national regulatory authorities to create 'sandboxes' for testing AI systems in a controlled environment.

Overall, the EU is expected to provide a more comprehensive range of legislation tailored to specific digital environments.

United States of America – Growing Body of AI Guidance

In the United States, the landscape is slightly different with a growing body of AI guidance.

In April 2020, the Federal Trade Commission published guidelines on AI usage and expectations for organisations using AI tools. Subsequently, in October 2022, the White House Office of Science and Technology Policy (OSTP) released a Blueprint for an AI Bill of Rights, providing a framework to 'help guide the design, development, and deployment of [AI] and other automated systems' with the aim of protecting the rights of the American public.12

In July 2023, the White House reached an agreement with top players in the development of AI, including Amazon, Google, Meta, Microsoft and OpenAI to adhere to eight safeguards.13 These include guidance surrounding third-party testing of the technology to ensure that AI products are 'safe' ahead of their release. According to the press,14 these voluntary commitments are meant to be an immediate way of addressing risks ahead of a longer-term push to get US Congress to pass laws regulating the technology.

What Happens Next?

It seems to us that AI regulation in the UK financial services sector is at an inflexion point, and it will be very interesting to see the policies that the Regulators design and/or implement following FS2/23.

Looking forward, we expect to see:

- further consultations from the FCA and PRA on how the current Senior Managers and Certification Regime can be amended to respond quickly to AI, including establishing controls requiring upskilling;

- issuance of technology-specific rules and guidance, as appropriate;

- further consultations on how existing UK guidance or other policy tools may be clarified or amended to apply to AI and other new technology;

- development of FCA and PRA sector-specific guidelines for AI development and use; and

- new AI policy statements issued by the Government, following the Summit.

1 Bank of England, 'Machine learning in UK financial services' (16 October 2019), https://www.bankofengland.co.uk/report/2019/machine-learning-in-uk-financial-services.

2 Bank of England, 'The AI Public-Private Forum: Final Report' (17 February 2022), https://www.bankofengland.co.uk/research/fintech/ai-public-private-forum.

3 UK Government, Policy Paper, 'Establishing a pro-innovation approach to regulating AI' (20 July 2022), https://www.gov.uk/government/publications/establishing-a-pro-innovation-approach-to-regulating-ai/establishing-a-pro-innovation-approach-to-regulating-ai-policy-statement.

4 Bank of England, 'Machine learning in UK financial services' (11 October 2022), https://www.bankofengland.co.uk/report/2022/machine-learning-in-uk-financial-services.

5 Bank of England, DP5/22 – Artificial Intelligence and Machine Learning (11 October 2022), https://www.bankofengland.co.uk/prudential-regulation/publication/2022/october/artificial-intelligence.

6 In May 2023, the PRA published Policy Statement 'Model Risk Management Principles for Banks' (PS6/23). Respondents supported the PRA's proposals to raise the standard of model risk management practices, and recognised the need to manage risks posed by models that have a material impact on business decisions. In PS6/23, the PRA stated that it would consider the outcome of DP5/22, together with the results of other ongoing surveys and consultations, to inform any decisions on further policy actions.

7 UK Government, 'Guidance: National AI Strategy' (18 December 2022), https://www.gov.uk/government/publications/national-ai-strategy/national-ai-strategy-html-version.

8 UK Government, Policy Paper, 'A pro-innovation approach to AI regulation' (3 August 2023), https://www.gov.uk/government/publications/ai-regulation-a-pro-innovation-approach/white-paper#:~:text=our%20policy%20paper%20proposed%20a,in%20ai%20and%20innovate%20responsibly.&text=this%20approach%20was%20broadly%20welcomed%20%e2%80%93%20particularly%20by%20industry.

9 Bank of England, FS2/23 'Artificial Intelligence and Machine Learning' (26 October 2023), https://www.bankofengland.co.uk/prudential-regulation/publication/2023/october/artificial-intelligence-and-machine-learning.

10 European Commission, 'Ethics guidelines for trustworthy AI' (8 April 2019), https://digital-strategy.ec.europa.eu/en/library/ethics-guidelines-trustworthy-ai. These guidelines are not legally binding, but institutions are invited to voluntarily endorse them.

11 Microsoft, 'Empowering responsible AI practices', https://www.microsoft.com/en-us/ai/our-approach-to-ai.

12 The White House, 'Fact Sheet: Biden-Harris Administration Secures Voluntary Commitments from Leading Artificial Intelligence Companies to Manage the Risks Posed by AI' (21 July 2023), https://www.whitehouse.gov/briefing-room/statements-releases/2023/07/21/fact-sheet-biden-harris-administration-secures-voluntary-commitments-from-leading-artificial-intelligence-companies-to-manage-the-risks-posed-by-ai/#:~:text=the%20biden%2dharris%20administration%20published,in%20home%20valuation%20and%20leveraging.

13 The Guardian, 'Top tech firms commit to AI safeguards amid fears over pace of change' (21 July 2023), https://www.theguardian.com/technology/2023/jul/21/ai-ethics-guidelines-google-meta-amazon.

14 Ibid.